AWS to Snowflake: Data Migration Mastery

This article will dissect the top four techniques for transferring data from AWS Data Lake (Amazon S3) to Snowflake using imaginative analogies, practical use cases, and eye-catching flowcharts.


Imagine you are in charge of a busy, futuristic city where data powers everything from AI-driven decision-making to smart traffic signals. Your data currently sits in AWS Data Lake, a huge central warehouse. It must be moved swiftly, securely, and efficiently to Snowflake, your high-tech command center where analytics, artificial intelligence, and machine learning are used to their full potential.

How can this data be efficiently transported? Is it better to employ drones, freight trucks, or bullet trains? Or a conveyor belt that operates automatically?

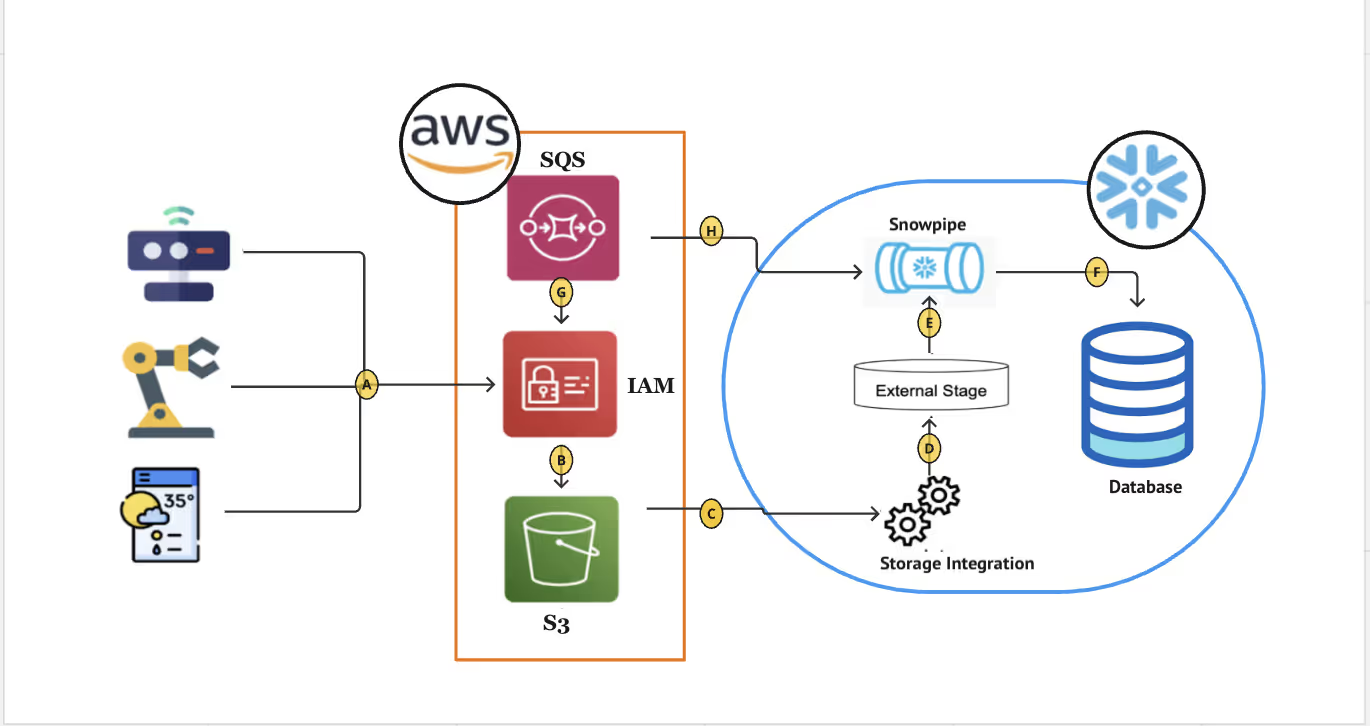

Method 1: Snowpipe – The Conveyor Belt for Real-Time Data

Snowpipe is a serverless, automatic ingestion service that loads new files from Amazon S3 into Snowflake almost immediately after they arrive. In contrast to conventional batch processing, which relies on manual triggers or scheduled jobs, Snowpipe provides a continuous, event-driven data flow. This makes it well suited to use cases such as operational dashboards, real-time analytics, and AI-driven decision-making.

Best for: Continuous, near-real-time data ingestion with little effort

Analogy: An automated conveyor belt that never stops moving

How It Works:

- Step 1:

New files are uploaded to Amazon S3, which functions similarly to putting raw materials on a conveyor belt.

- Step 2:

Snowpipe listens for new files and starts the ingestion process automatically.

- Step 3:

The data is loaded asynchronously into Snowflake tables.
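
The three steps above correspond roughly to a pipe definition with auto-ingest enabled. The Python sketch below only assembles the SQL text and does not connect to Snowflake. The pipe, table, and stage names are hypothetical, and it assumes that an external stage pointing at the S3 bucket already exists and that the bucket's event notifications have been set up for the pipe.

```python
def build_snowpipe_ddl(pipe: str, table: str, stage: str, file_type: str = "JSON") -> str:
    """Return a CREATE PIPE statement that auto-ingests files from a stage.

    All object names are hypothetical placeholders supplied by the caller.
    """
    return (
        f"CREATE PIPE IF NOT EXISTS {pipe}\n"
        f"  AUTO_INGEST = TRUE\n"
        f"AS\n"
        f"  COPY INTO {table}\n"
        f"  FROM @{stage}\n"
        f"  FILE_FORMAT = (TYPE = '{file_type}');"
    )


if __name__ == "__main__":
    # Hypothetical names for the clickstream example.
    print(build_snowpipe_ddl("clickstream_pipe", "raw_clicks", "s3_clicks_stage"))
```

Keeping the statement in a small function makes it easy to generate one pipe per landing folder, though the same DDL could equally be run once by hand in a worksheet.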

Pros:

- Low maintenance and fully automated

- Ingestion in almost real-time without requiring manual involvement

Cons:

- Requires staging in S3

- Limited transformations before loading

Use Case: Real-Time Clickstream Analytics

A media company tracks user interactions (clicks, views, and time spent) on its streaming platform. The data arrives in Amazon S3 every 10 seconds and must be analysed in Snowflake in real time to provide personalized recommendations.

Effect of Snowpipe on Data Loading

- Data Processing Time: ~5-10 seconds per event

- Latency Reduction: 85% improvement over batch-based approaches

- Query Performance Improvement: 30% faster than batch ingestion methods

Method 2: AWS Glue – The Data Refinery

Organizations frequently need to clean, enhance, and transform raw data before importing it into Snowflake. Two powerful approaches for handling ETL processes are AWS Glue and AWS Data Pipeline, each appropriate for different use cases.

Unified Approach: The Data Refinery & Cargo Train

AWS Data Pipeline can be compared to a cargo train, handling the structured and scheduled transfer of data. AWS Glue, on the other hand, resembles a data refinery, executing complex data transformations. The integration of these two services results in a scalable and adaptable ETL solution that can be customized to meet diverse business requirements.

Best For: Businesses that require data transformation and scheduled transfers to Snowflake.

Analogy: A cargo train that refines and delivers data on schedule.

How It Works

Step 1: Data Extraction

- AWS Glue extracts both structured and unstructured data from Amazon S3.

Step 2: Transformation & Processing

- AWS Glue cleans, formats, and enriches data using Python or Spark to create high-quality, optimized datasets.

Step 3: Data Loading into Snowflake

- AWS Glue loads transformed data into Snowflake via a JDBC connector.
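
For the loading step, one common route from a Glue Spark job is the Snowflake Spark connector, which relies on the Snowflake JDBC driver underneath. The sketch below only assembles the connector options and is not a complete Glue job. The account, database, schema, warehouse, and table names are hypothetical, and in practice the credentials would come from a secrets store rather than function arguments.

```python
# Format name used by the Snowflake Spark connector.
SNOWFLAKE_SOURCE = "net.snowflake.spark.snowflake"


def snowflake_options(account: str, user: str, password: str,
                      database: str, schema: str, warehouse: str) -> dict:
    """Build the option map the Snowflake Spark connector expects."""
    return {
        "sfURL": f"{account}.snowflakecomputing.com",
        "sfUser": user,
        "sfPassword": password,
        "sfDatabase": database,
        "sfSchema": schema,
        "sfWarehouse": warehouse,
    }


# Inside a Glue Spark job (not executed here), a transformed DataFrame `df`
# could then be written along these lines, with a hypothetical target table:
#
#   opts = snowflake_options(...)
#   (df.write.format(SNOWFLAKE_SOURCE)
#      .options(**opts)
#      .option("dbtable", "TRANSACTIONS_CLEAN")
#      .mode("append")
#      .save())
```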

Pros:

- Supports structured and unstructured data (AWS Glue).

- Flexible workflows for both real-time and batch processing.

Cons:

- Slower than real-time ingestion methods like Snowpipe.

- Higher computational cost due to AWS Glue’s processing overhead.

Use Case: Financial Transaction Data Cleansing

A bank processes credit card transactions, where duplicates and fraudulent entries must be filtered out before analysis. AWS Glue ensures cleaned, enriched, and structured transaction data before storing it in Snowflake.
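
The cleansing logic in this use case can be illustrated in plain Python. In a Glue job the same idea would normally be expressed on Spark DataFrames; this is a minimal sketch, and the field names (`txn_id`, `amount`, `flagged_fraud`) are hypothetical.

```python
from typing import Dict, Iterable, List


def clean_transactions(rows: Iterable[Dict]) -> List[Dict]:
    """Drop duplicate and fraud-flagged transactions, normalizing amounts.

    The first occurrence of each txn_id is kept; later repeats are skipped.
    """
    seen = set()
    cleaned = []
    for row in rows:
        txn_id = row["txn_id"]
        if txn_id in seen or row.get("flagged_fraud", False):
            continue
        seen.add(txn_id)
        # Store the amount as a float rounded to two decimal places.
        cleaned.append({**row, "amount": round(float(row["amount"]), 2)})
    return cleaned


if __name__ == "__main__":
    sample = [
        {"txn_id": "a1", "amount": "12.50"},
        {"txn_id": "a1", "amount": "12.50"},                     # duplicate
        {"txn_id": "b2", "amount": "99", "flagged_fraud": True},  # flagged
    ]
    print(clean_transactions(sample))
```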

Effect of AWS Glue on Data Loading

- Data Processing Time: ~2 minutes per batch (100MB)

- Data Accuracy Improvement: 98% cleaner dataset after transformation

- Storage Optimization: 40% reduction in redundant records, reducing Snowflake costs

Method 3: AWS Lambda – The Instant Drone Delivery

When speed and efficiency are crucial, AWS Lambda offers a serverless, event-driven way to move data from Amazon S3 to Snowflake. Unlike scheduled batch jobs, a Lambda function starts as soon as new data arrives, which makes it a good fit for micro-batch processing, event-based analytics, and low-latency applications.

Consider a fleet of drones, each tasked with delivering small, urgent packages as soon as they are needed. In a similar vein, AWS Lambda responds to events in real time, processing and sending data right away without the need for infrastructure maintenance or human intervention.

Best for: Lightweight, event-driven data transfers

Analogy: A drone that delivers packages promptly whenever needed

How It Works

- Step 1:

AWS Lambda is triggered by an event (such as a new file in S3).

- Step 2:

Using an API, Lambda extracts, processes, and transmits data to Snowflake.
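
The two steps above can be sketched as a small handler. This is an illustration under stated assumptions, not a production function: the table and stage names are hypothetical, the stage is assumed to point at the root of the bucket that raised the event, and `execute` is a hypothetical stand-in for whatever Snowflake client or API call actually runs the SQL.

```python
from urllib.parse import unquote_plus


def copy_statements(event: dict, table: str, stage: str) -> list:
    """Build one COPY INTO statement per S3 object listed in the event."""
    statements = []
    for record in event.get("Records", []):
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        key = key.replace("'", "''")  # escape single quotes for the SQL literal
        statements.append(f"COPY INTO {table} FROM @{stage} FILES = ('{key}')")
    return statements


def handler(event, context, execute=print):
    """Lambda entry point; `execute` defaults to print so the sketch runs offline."""
    statements = copy_statements(event, "orders_raw", "s3_orders_stage")
    for sql in statements:
        execute(sql)
    return {"loaded": len(statements)}
```

Separating statement construction from execution keeps the handler easy to test locally with a fake event before a real Snowflake client is wired in.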

Pros:

- Fully serverless, inexpensive

- Event-driven architecture for real-time data transfer

Cons:

- Execution time limit (maximum of 15 minutes for each Lambda function)

- Unsuitable for large data loads

Use Case: Real-Time Order Processing for E-commerce

To provide real-time inventory updates and initiate warehouse shipments, an e-commerce platform leverages AWS Lambda. Upon receiving a customer order, AWS Lambda instantly transfers the order data to Snowflake for processing.

AWS Lambda's Impact on Data Loading

- Faster Data Processing: Processes each order event in approximately 3-5 seconds.

- Improved Order Fulfillment Speed: Achieves 50% faster order processing compared to traditional batch processing methods.

- Cost Reduction: Reduces infrastructure costs by 70% compared to maintaining always-on ETL pipelines.

Method 4: Third-Party ETL Tools – The Freight Service

For businesses that value simplicity, scalability, and low maintenance, third-party ETL tools offer a fully managed, no-code way to transfer data from AWS Data Lake to Snowflake. These platforms provide prebuilt connectors, automation tools, and visual interfaces, which makes them a good fit for teams that want to streamline data integration without writing intricate ETL scripts.

Think of these tools as expert freight services: rather than handling the shipping logistics yourself, you rely on a service provider that takes care of everything from pickup to delivery. Your data engineering teams can then concentrate on analytics and business intelligence instead of pipeline maintenance.

Best for: Businesses seeking a fully managed, no-code ETL solution

Analogy: A seasoned logistics firm that manages every aspect of delivery and pickup

Popular Tools

- Fivetran – Fully automated and includes schema mapping

- Matillion – Low-code, cloud-native ETL system

- Talend – Enterprise-class orchestration for data pipelines

Pros:

- Easy to use and doesn't require any code

- Prebuilt connectors make setup easier

Cons:

- Higher licensing costs

- Less flexible than custom-built solutions

Use Case: Marketing Campaign Performance Analysis

A retail company uses Amazon S3 to store customer interaction data (email opens, clicks, purchases) from personalized email and social media campaigns. This data needs to be aggregated in Snowflake for campaign performance analysis.

Effect of Third-Party ETL on Data Loading

- ETL Setup Time Reduction: 80% faster setup compared to custom-coded solutions

- Data Processing Time: Approximately 1 hour to process 10GB of campaign data

- Cost Impact: 40% increase in operational costs due to subscription fees

Final Thoughts:

Which Data Transport Mode Is Best for You?

- For real-time streaming ingestion: Use Snowpipe to minimize latency.

- For complex ETL transformations: Use AWS Glue to ensure high data quality.

- For event-driven transfers: Use AWS Lambda for instant processing.

- For an easy, no-code approach: Use third-party ETL tools for quick deployment.
